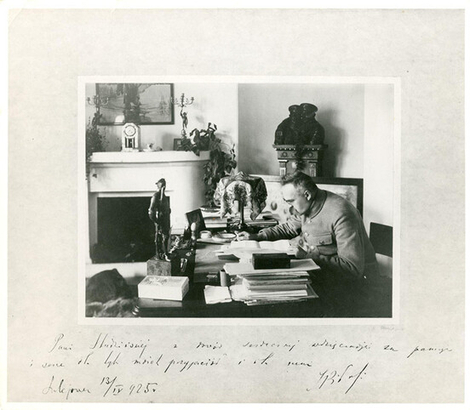

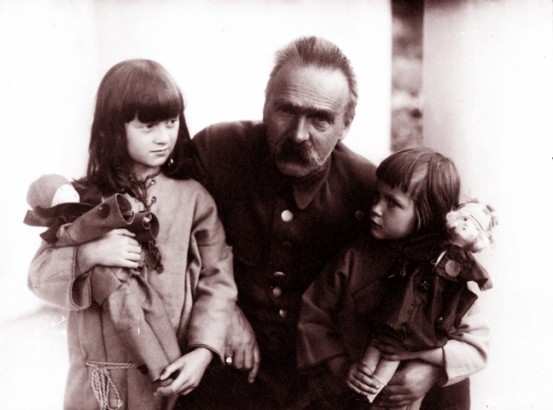

President Mościcki visiting Nowy Targ, 1929

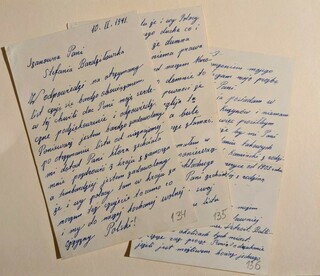

I am fascinated by old black-and-white photographs of bygone times, and I especially enjoy browsing those from the period after 1918. The Second Polish Republic was without doubt a very interesting, if difficult, period in Polish history. Our country reappeared on the map of Europe after many years of partitions. These multinational and multi-faith lands, which until recently had belonged to three different states, had to be united. The state was economically backward, three currencies circulated within it, and in the early 1920s its very existence was extremely fragile. It was a time of fighting over the shape of Poland's borders: the heroic defense of Lwów and the struggle for Eastern Galicia, the conflict with the Czechs over Zaolzie, the Silesian Uprisings, the Greater Poland Uprising, and the Polish-Bolshevik War of 1919-1921.

Rebuilding the state was a long and difficult process. Despite the enormous difficulties the Polish state faced at the time, a great deal was undoubtedly achieved. This was helped by a remarkable enthusiasm that emerged both among the intellectual elites and among ordinary people. In the 1920s a monetary reform was carried out that rescued Poland from hyperinflation, and the Polish złoty began to be issued. Compulsory universal schooling was introduced at the elementary level, and a number of new universities were opened, among them the Akademia Górniczo-Hutnicza in Kraków and the Uniwersytet Poznański, while the Uniwersytet Stefana Batorego in Wilno resumed its activity.